Collaborative Learning Experimentation Testbed

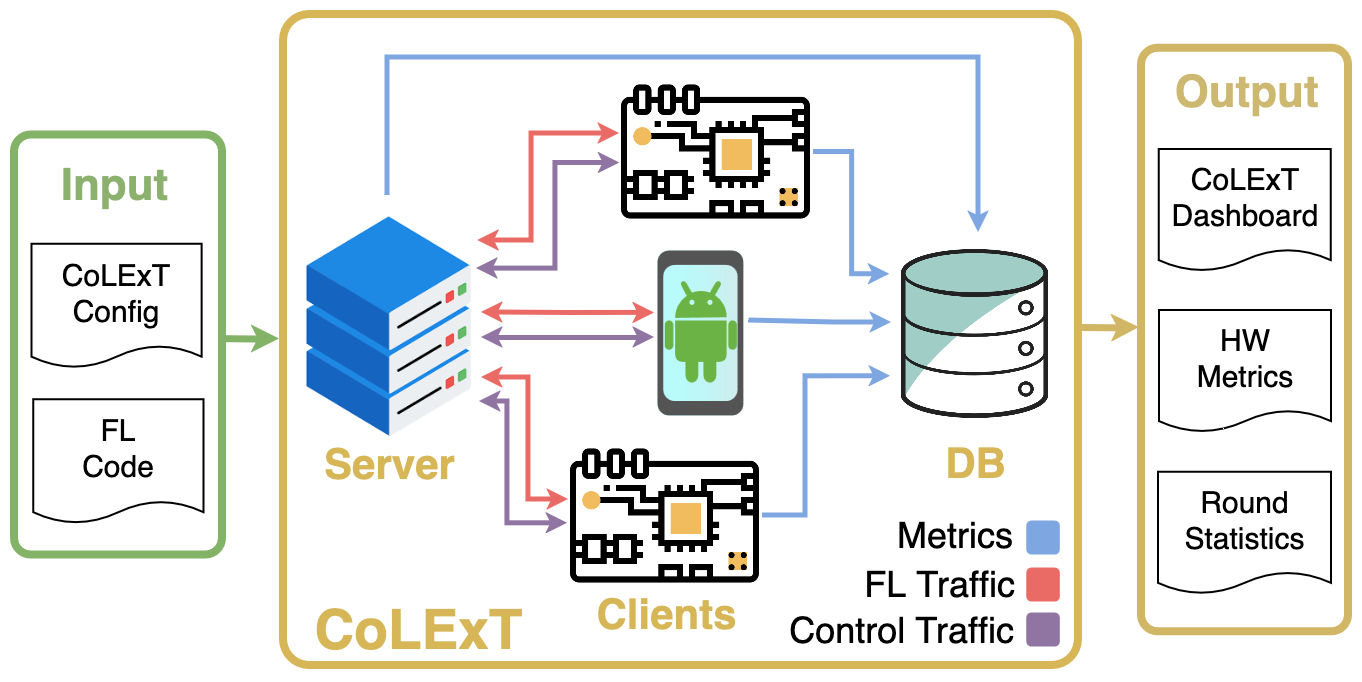

CoLExT is a Collaborative Learning Testbed built for machine learning researchers to realistically execute and profile Federated Learning (FL) algorithms on real edge devices and smartphones. To facilitate experiments, CoLExT comes with a software library that seamlessly deploys and monitors FL algorithms developed with Flower, a popular FL framework.

For an overview of CoLExT, please continue reading. For a detailed description, please see our paper. We've open-sourced our system design and setup so that others can build their own testbed, or even connect their testbed with ours, expanding the device pool. If you'd like to experiment with CoLExT, please show your interest by filling out this form.

The need for real deployments

Despite the large body of FL research, most of it is conducted in simulated environments, where device limitations may be overlooked and metrics such as energy consumption cannot be accurately estimated. The largest hurdle to on-device evaluation is the inaccessibility of these devices, along with the difficulty of running real-world experiments across a variety of client devices and platforms while collecting multiple dimensions of algorithm performance.

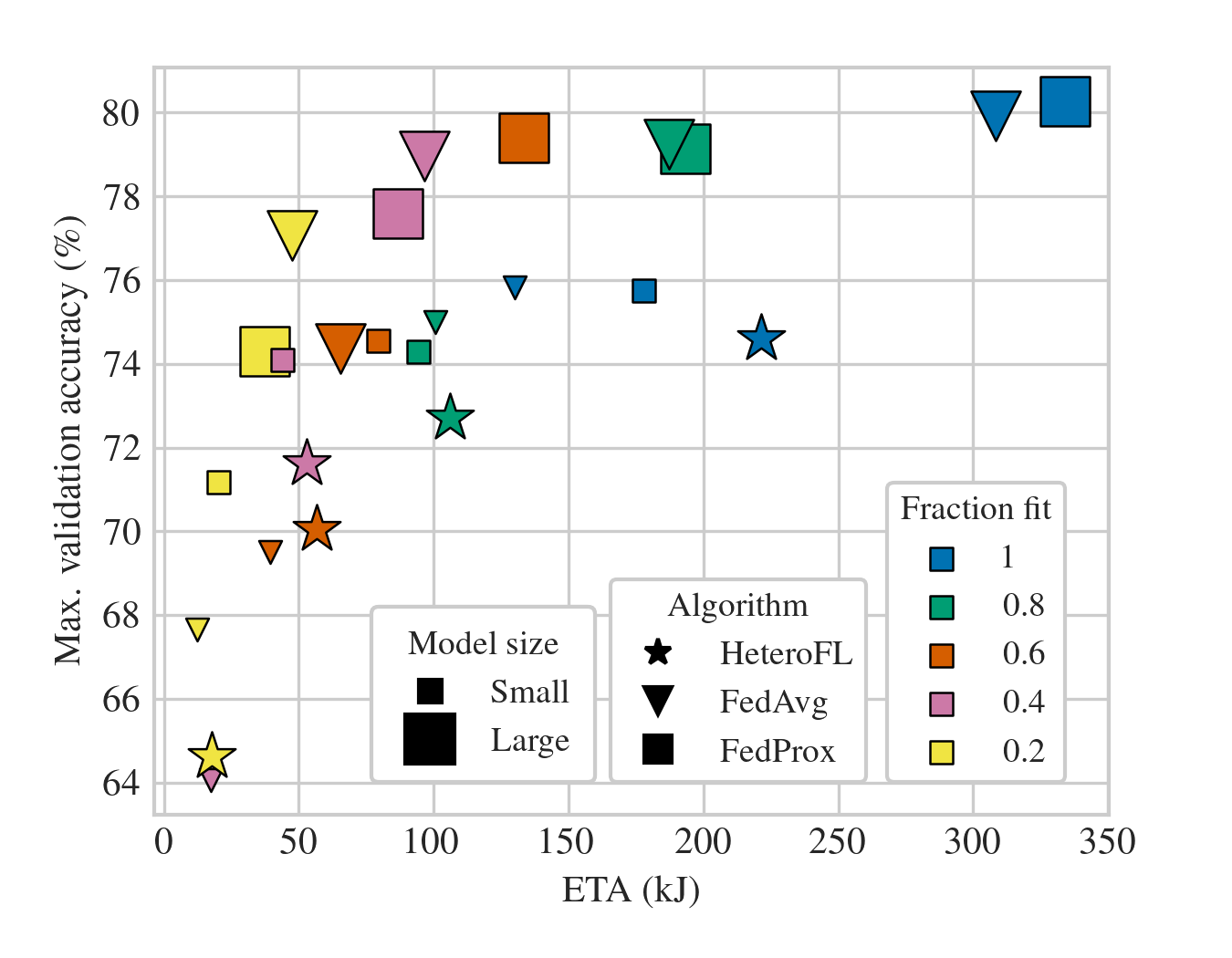

CoLExT provides one of the first Collaborative Learning Testbeds where researchers can easily deploy and run cross-device FL algorithms on real devices while metrics such as accuracy, energy consumption, and time to convergence are collected automatically. The figure illustrates how CoLExT enhances FL algorithm evaluation. In it, we evaluate three FL methods while varying the model size and the client participation ratio per training round.

Simulation-based assessments compare algorithms only by accuracy, and would therefore identify FedProx with a large model and full client participation as the most promising configuration (top-right blue square). CoLExT additionally exposes metrics such as CPU utilization, training duration, and energy usage. In the figure, CoLExT reveals that the energy needed to reach a given accuracy (i.e., energy-to-accuracy, ETA) differs drastically among points that achieve very similar accuracy. For instance, while the FedProx configuration identified above reaches the top accuracy, it consumes 3x more energy than the FedAvg configuration using 40% client participation (top-left pink triangle), for a mere 4% increase in accuracy.
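To make the ETA comparison concrete, the sketch below assumes a per-round trace of test accuracy and of the energy (in joules) consumed during each round, and defines ETA as the cumulative energy spent up to the first round whose accuracy reaches a target. The function name `energy_to_accuracy` and the toy traces are hypothetical illustrations, not CoLExT output and not the measurements behind the figure.

```python
from typing import Optional, Sequence


def energy_to_accuracy(
    accuracies: Sequence[float],
    energies_j: Sequence[float],
    target: float,
) -> Optional[float]:
    """Cumulative energy (J) spent until accuracy first reaches `target`.

    Assumes accuracies[i] is the accuracy after round i and energies_j[i] is the
    energy consumed during round i, summed over all participating devices.
    Returns None if the target is never reached.
    """
    if len(accuracies) != len(energies_j):
        raise ValueError("accuracy and energy traces must have the same length")
    spent = 0.0
    for acc, energy in zip(accuracies, energies_j):
        spent += energy
        if acc >= target:
            return spent
    return None


# Made-up traces for illustration only.
full_participation = {"acc": [0.40, 0.55, 0.62, 0.66], "energy": [300.0] * 4}
partial_participation = {"acc": [0.35, 0.50, 0.60, 0.63], "energy": [120.0] * 4}

target = 0.60
eta_full = energy_to_accuracy(full_participation["acc"], full_participation["energy"], target)
eta_partial = energy_to_accuracy(partial_participation["acc"], partial_participation["energy"], target)
print(eta_full, eta_partial)  # 900.0 360.0
```

Comparing ETA at a fixed target, rather than final accuracy alone, is what separates configurations whose accuracy is nearly the same but whose energy cost is not.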

Current CoLExT setup

- 28 Single Board Computers (SBCs)
  - Orange Pi, LattePanda, NVIDIA Jetson
- 20 smartphones
  - Samsung, Xiaomi, Google Pixel, Asus ROG, OnePlus
- High-voltage power meter
- Wired and wireless networking
- Workstation (FL server)

Interacting with CoLExT

CoLExT has been designed with simplicity in mind. Our current setup assumes the user has developed an FL algorithm using the Flower framework. Under this setup, these are the steps required to start interacting with the testbed:

- Install our Python package (under development):

  ```shell
  $ python3 -m pip install colext
  ```

- Add CoLExT decorators to the Flower client and strategy:

  ```python
  import flwr as fl
  from colext import MonitorFlwrClient, MonitorFlwrStrategy

  @MonitorFlwrClient
  class FlowerClient(fl.client.NumPyClient):
      ...  # [...] your existing client implementation

  @MonitorFlwrStrategy
  class FlowerStrategy(fl.server.strategy.Strategy):
      ...  # [...] your existing strategy implementation
  ```

- Write a CoLExT configuration file, `colext_config.yaml`. CoLExT exposes environment variables with experiment information (e.g., `COLEXT_SERVER_ADDRESS`, `COLEXT_CLIENT_ID`, `COLEXT_N_CLIENTS`) that can be referenced in the entrypoint arguments:

  ```yaml
  code:
    client:
      entrypoint: "client.py"
      args:
        - "--server_addr=${COLEXT_SERVER_ADDRESS}"
        - "--client_id=${COLEXT_CLIENT_ID}"
    server:
      entrypoint: "server.py"
      args: "--n_clients=${COLEXT_N_CLIENTS} --n_rounds=3"
  devices:
    - { dev_type: JetsonAGXOrin, count: 2 }
    - { dev_type: JetsonOrinNano, count: 4 }
    - { dev_type: LattePandaDelta3, count: 4 }
    - { dev_type: JetsonNano, count: 6 }
  monitoring:
    scrapping_interval: 0.3 # in seconds
  ```

- Specify your Python dependencies using a `requirements.txt` file.

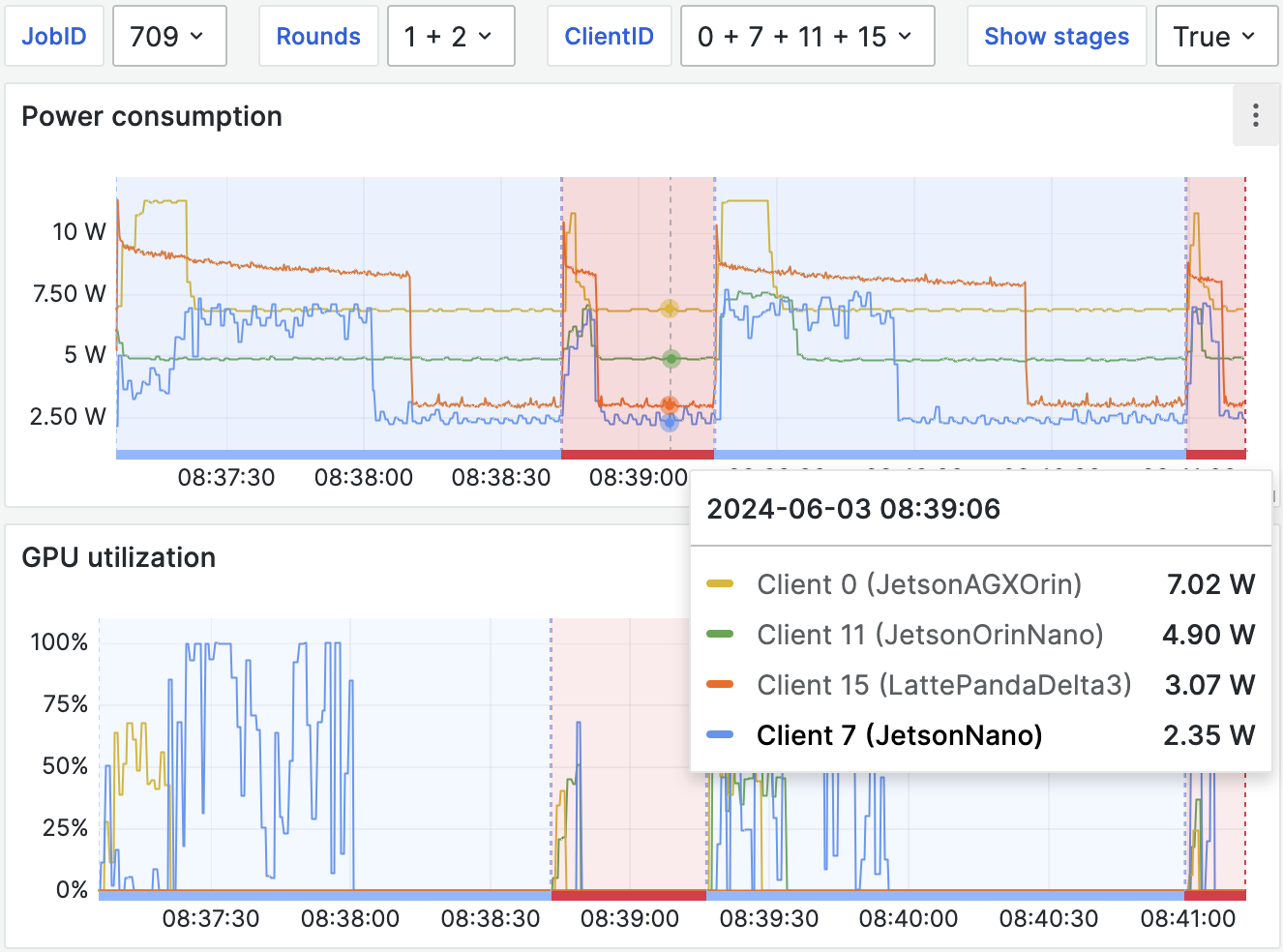

- Deploy, monitor in real time, and download the performance metrics:

  ```shell
  $ colext_launch_job
  # Prints a job-id and a dashboard link.
  # A snippet of the dashboard is shown in the figure.
  # After the job finishes, metrics can be retrieved as CSV files.
  # On retrieval, CoLExT also generates plots from these metrics.
  $ colext_get_metrics --job_id <job-id>
  ```
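The `${...}` placeholders in the configuration are filled from the environment variables CoLExT exposes. How CoLExT resolves them is not described here, so the sketch below simply assumes they are substituted from an environment mapping (emulated with `string.Template`) and shows how a `client.py` entrypoint might then parse the resulting arguments. The helpers `expand_args` and `parse_client_args`, the integer type of the client ID, and the example values are all hypothetical.

```python
import argparse
from string import Template
from typing import List, Mapping

# Argument templates copied from the client section of colext_config.yaml.
CLIENT_ARGS = [
    "--server_addr=${COLEXT_SERVER_ADDRESS}",
    "--client_id=${COLEXT_CLIENT_ID}",
]


def expand_args(templates: List[str], env: Mapping[str, str]) -> List[str]:
    """Fill ${VAR} placeholders from `env`; raises KeyError if a variable is unset."""
    return [Template(t).substitute(env) for t in templates]


def parse_client_args(argv: List[str]) -> argparse.Namespace:
    """Hypothetical argument parser for client.py."""
    parser = argparse.ArgumentParser(description="Flower client launched by CoLExT")
    parser.add_argument("--server_addr", required=True, help="host:port of the FL server")
    parser.add_argument("--client_id", type=int, required=True)  # assumed numeric
    return parser.parse_args(argv)


# Made-up values; inside a real job one would pass os.environ instead.
example_env = {"COLEXT_SERVER_ADDRESS": "10.0.0.1:8080", "COLEXT_CLIENT_ID": "3"}
args = parse_client_args(expand_args(CLIENT_ARGS, example_env))
print(args.server_addr, args.client_id)  # 10.0.0.1:8080 3
```

The server entry gives `args` as a single string rather than a list; under the same assumption it could be expanded the same way and then split into tokens with `shlex.split`.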

Behind the scenes

- Automatic containerization
  - Each experiment runs in its own isolated environment
  - Containerization is done automatically to hide the differences between device architectures and operating systems
- Deploying and orchestrating experiments
  - For SBCs:
    - The testbed forms a Kubernetes cluster
    - Kubernetes orchestrates the deployment of clients and server
  - For smartphones:
    - Deployment is managed using Android Debug Bridge (ADB)
    - Orchestration is handled directly from the `colext` package
- Automatic performance metrics
  - User code is not modified to collect performance metrics
  - Performance metrics are anchored on the Flower API
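To illustrate how metrics can be anchored on the Flower API without modifying user code, the sketch below uses a class decorator that wraps a client's `fit` and `evaluate` methods (the methods Flower calls on a `NumPyClient`) and records their wall-clock duration. `monitor_client`, `ToyClient`, and the in-memory `METRICS` list are hypothetical; this is not CoLExT's `MonitorFlwrClient` implementation, and it does not attempt the hardware metrics (energy, CPU utilization) that CoLExT collects.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory sink; a real testbed would ship records to a metrics store.
METRICS: List[Dict[str, Any]] = []


def _timed(name: str, method: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a method so that every call appends its duration to METRICS."""
    @functools.wraps(method)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        try:
            return method(self, *args, **kwargs)
        finally:
            METRICS.append({"stage": name, "seconds": time.perf_counter() - start})
    return wrapper


def monitor_client(cls: type) -> type:
    """Class decorator: instrument fit/evaluate in place; the method bodies are untouched."""
    for name in ("fit", "evaluate"):
        if hasattr(cls, name):
            setattr(cls, name, _timed(name, getattr(cls, name)))
    return cls


@monitor_client
class ToyClient:
    """Stand-in for a NumPyClient subclass, with the same fit/evaluate signatures."""

    def fit(self, parameters, config):
        time.sleep(0.01)  # pretend to train
        return parameters, 10, {}

    def evaluate(self, parameters, config):
        return 0.5, 10, {"accuracy": 0.8}


client = ToyClient()
client.fit([1.0], {})
client.evaluate([1.0], {})
print([m["stage"] for m in METRICS])  # ['fit', 'evaluate']
```

Because the wrapping happens at class-definition time, the user only adds one decorator line, which matches the usage shown in the steps above.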

Current limitations

- Maximum supported Flower version is 1.6
  - We plan to support more recent versions soon
- No automatic code containerization for smartphones
  - Custom Kotlin code is required
  - On smartphones, the only supported ML framework is TensorFlow Lite
- Smartphones and SBCs cannot be used in the same experiment
  - Different serialization between the two device categories prevents this
- Dataset management
  - Currently, datasets must always be downloaded and partitioned
    - One way to do this is with Flower Datasets
  - This limitation is alleviated through the use of shared dataset caches
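If every client downloads the same dataset (for example, from a shared cache) and partitions it locally, the split must be deterministic so that clients obtain disjoint shards without coordinating. The sketch below assumes exactly that setup and implements a seeded IID split keyed on the client ID; `iid_partition` and its default seed are hypothetical, and it is a plain-Python stand-in for the partitioners that Flower Datasets provides.

```python
import random
from typing import List


def iid_partition(n_samples: int, n_clients: int, client_id: int, seed: int = 42) -> List[int]:
    """Return the sample indices assigned to `client_id` under a seeded IID split.

    Every client shuffles the same index range with the same seed, so all clients
    agree on the permutation and each takes a disjoint, contiguous slice of it.
    """
    if not 0 <= client_id < n_clients:
        raise ValueError("client_id must be in [0, n_clients)")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that shard sizes differ by at most one.
    base, extra = divmod(n_samples, n_clients)
    start = client_id * base + min(client_id, extra)
    size = base + (1 if client_id < extra else 0)
    return indices[start:start + size]


shards = [iid_partition(10, 3, cid) for cid in range(3)]
print([len(s) for s in shards])  # [4, 3, 3]
```

The seeded shuffle is reproducible as long as all clients run the same Python version, which a shared per-experiment container image helps ensure.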

Future work

Testbed development has only just begun. We currently have a total of 48 devices, but we envision scaling the FL testbed to over 100 devices. This number could grow even further if we merge our FL testbed with similar testbeds at other institutions.

This project benefited tremendously from the contributions by: Janez Bozic, Amandio Faustino, Veljko Pejovic, Marco Canini, Boris Radovic, Suliman Alharbi, Abdullah Alamoudi, Rasheed Alhaddad.